What are the differences, and how can you ensure your staff use them in a compliant and secure manner?

Fordway recently attended an industry event where we met a selection of IT leaders to discuss current issues and future strategy. Unsurprisingly, one of the key areas of interest was AI. More particularly, they wanted to know how and where Microsoft’s recently announced Copilot AI assistants fit alongside the better-known, publicly available AI engines such as OpenAI’s ChatGPT, Alphabet/Google’s Bard and Meta/Facebook’s LLaMA.

They also expressed concerns about how they could ensure their users and corporate data remain secure and compliant when using these tools to boost productivity.

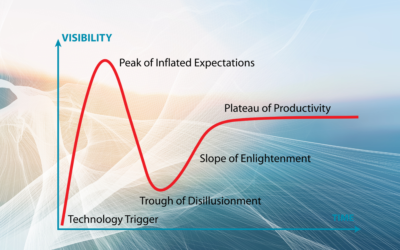

The simple answer is that whilst they are all based on AI technology, Copilot is different. When using ChatGPT, Bard or LLaMA, you are effectively using a ‘super search engine’.

The difference between these and traditional search engines such as Google, Bing and DuckDuckGo lies in how they answer a query such as ‘how to brew beer’. A traditional search engine returns a list of the highest-ranking web pages on the topic, as ranked by its algorithms.

An AI model, by contrast, gives you a summary of the equipment needed and the brewing process, based on the information about that subject in the data it was trained on.

The exact data, sources and algorithms used to analyse, sort and rank that data are closely guarded commercial secrets. An educated guess, however, suggests that ChatGPT has primarily been trained on content from across the general Internet and that Bard draws heavily on Google search data. Meta has stated that LLaMA was trained on publicly available sources rather than on data from its own products such as Facebook and Instagram.

Part of the answer to the ‘how do we ensure our users remain compliant?’ question lies in copyright. Some of the material these models were trained on to reach certain answers will almost certainly be subject to copyright. If your users reproduce answers based on that copyrighted material and make them publicly available, your organisation is potentially at risk of legal action by the copyright holder.

This is a key issue in the increasing use of AI. It is a long way from being resolved and was a factor in the recent Hollywood writers’ strike. Another issue that governments and the AI industry must confront is how to recognise and identify text and images that have been generated by AI rather than by humans, such as deepfakes. Resolving that is well above most of our pay grades.

Apart from Bing Copilot, which uses OpenAI’s publicly accessible GPT models to improve responses to general search queries, Microsoft’s Copilot AI assistants for Microsoft 365 and Windows 11 are structured AI models. They are specific to Microsoft applications, services and capabilities, and are designed for organisations’ own internal use.

The models have been developed and trained to help with specific common tasks such as ‘optimise my PC to best practices’, ‘summarise this Teams group chat for me’, or ‘create a sales proposal for our key product to this customer’.

Copilot searches, processes and uses your organisation’s data to respond to these queries, so its responses are specific to your organisation and to each user, based on the data that user can access.

This is one reason why, before implementing Copilot, you need to ensure your users’ access rights and privileges are correctly configured and assigned. Otherwise Copilot may draw on data a user should not be able to access when generating its answers.

By Richard Blanford

CEO and founder, Fordway

Want to learn how to make best use of these new technologies?

Our team of Microsoft experts can help you to understand how to implement AI into your IT strategy and boost productivity in your organisation.